Search is not dying. It is already dead. And it is taking the Open Web down with it.

Looking for something

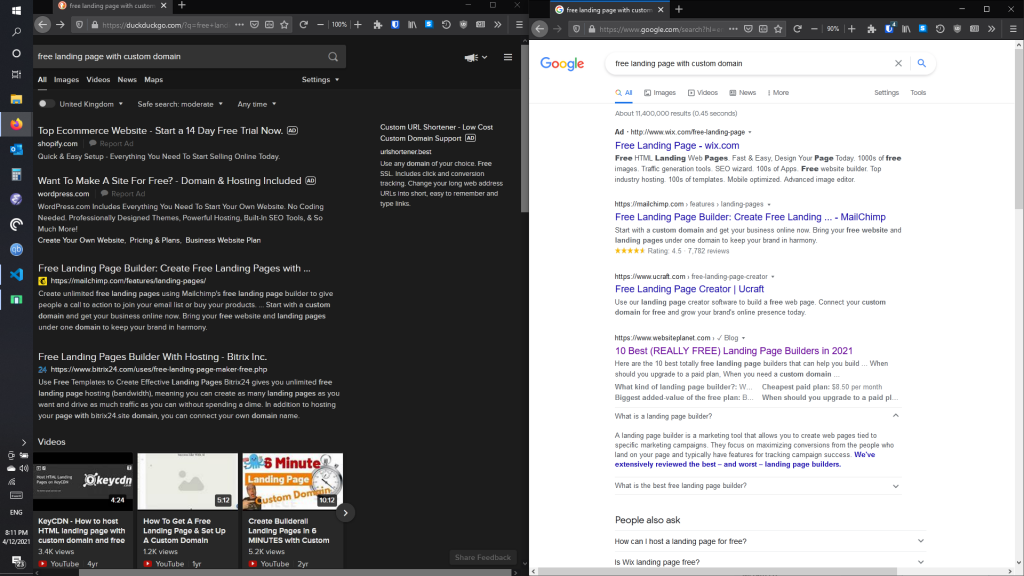

When I needed to host a landing page for my startup, MakThis, I was looking for a specific kind of solution. Like most startups at the super-early stage, I did not have any capital to allocate to something like a landing page. The usual WYSIWYG builders, like Wix and Squarespace, required you to pay before you could attach your own domain. It took me hours of searching to discover that GitHub, where I already host my code, also hosts simple HTML/CSS/JS landing pages through a service appropriately titled GitHub Pages. There is a whole ecosystem of themes and starter setups built around this service. This was exactly what I was looking for.

So why was it so hard to find?

Bamgoogled

I do not use Google as my primary search engine, and I have not for almost a decade. I use DuckDuckGo. DDG is a privacy-focused search engine that introduced the Instant Answers innovation. It also let me search Google by simply adding !g to the query. Instant Answers were the main reason I switched. Google soon followed by giving users Instant Answers as well. I stuck with DDG because of the privacy features, and its instant answers were just as good as Google's.

Except instant answers turn the search product from a keeper of knowledge, one that serves you the most relevant sources and lets you draw your own conclusions, into an oracle that serves you sage answers to your query. On the surface, this seems like a great idea for users: you get the best answer immediately, without waiting for another web page to load. In practice, it can spread misinformation, even about trivial things like dishwasher efficiency. It also lends legitimacy to fluffy listicles and blogspam. Google is notoriously bad at this. It is impossible to even get a decent result.

I found my solution by searching Reddit. It has its own problems, but it is easy to establish a poster's credibility by just looking at their post history. Most useful information is hidden inside similar silos, unreachable by search engines.

The Silos

Last year, for my 30 days of Blog series, I wrote about Neeva, a subscription-only search product that claimed to compete with Google. To illustrate my point, I referenced a Reply All episode in which the hosts spend countless hours trying to google a problem that was solved by a single Facebook search. This has been par for the course for the web in 2021. Most of the open web is full of spam, trash and SEO hacks. Most of the useful information is hidden away in niche blogs without SEO, private subreddits, StackExchange and Facebook Groups. That data is either not indexed, because the hosts actively or passively block search bots, or it is not prioritized by search engines. These platforms are also subject to spam, but overall you can find more reliable information there.

These forums are full of people posting helpful information just to help someone out. There are rarely any commercial interests involved, except for the platform itself. The posters are not optimizing for SEO or using tricks so that Google (and Bing, which DDG uses as its backend) will deem them credible. Facebook considers Google a competitor and regards letting it index Facebook's content as a mistake. Facebook is now the biggest Deep Web archive.

Why did this happen?

Before the infamous Panda update, SEO was simple: stuff your site with keywords, create spammy backlinks and rise through the ranks. In 2011, Google added more factors to how it ranks pages. That was the Panda update. If we take Google's word at face value, this was done to counter spam on its results pages, and in particular to counter the content farm. It also killed the search traffic for thousands of specialized phpBB and vBulletin forums. Unlike the info-silos of today, those forums did not block search robots from indexing their content. Other similarly non-spammy sources also lost search traffic, and with it their ad-based revenue.

Consequently, when Google prioritized 'credibility' via site content, it raised the cost of SEO. Now you need a 'content writer' to produce appropriate, SEO-friendly content, either on staff or as a rotation of freelancers. This is exactly the content that Google can repackage as an Instant Answer. You might be wondering: how is this any more credible than before? Well, it is not. You do not have to provide anything of value to the user. The information provided is probably not written by an expert. It is probably full of affiliate links, 500MB of JavaScript code that tracks you across the web, and ads that slow your whole computer down (remember when we got rid of Flash for that?). It is just as useless as the keyword-stuffed scam site from 2003, but with better grammar, and you don't even have to leave Google to read it. The irony of the update is that while 'defeating' content farms, Google turned everyone into a content farm.

What is Google doing to fix this?

My hypothesis is that this is by design. I am a cynical person by nature, and I think Google broke organic search because devaluing organic results makes paid results less useless by comparison. And the more time you spend on Google, the more likely you are to click on an ad. This may sound like a conspiracy theory, but there is a storied history of technology companies making their products less effective for financial gain. And as goes Google, so goes Bing. I know Bing is a joke in the mainstream zeitgeist, but it is pretty much indistinguishable from Google.

So what?

Well, the promise of the web, and of the internet as a whole, was that information would be able to roam free. You could access any idea ever published. With 400 million active websites today, a functional search engine is essential for sifting through them. In his now-classic essay "Search is too important to be limited to one company", Cory Doctorow wrote:

Search is the beginning and the end of the internet. Before search, there was the idea of an organised, hierarchical internet, set up along the lines of the Dewey Decimal system.

The internet is converging towards the same commercial media model that existed before it: information access is subject to either government censors or commercial interests. The promise of the internet might remain unfulfilled. We need an open web.

How to save the open web?

For a while now, I have had an idea swirling in my head: a federated, peer-to-peer search index and distributed-computing search engine that would be free of commercial interest. Will it take over Google? Probably not, but we have the means to build a completely free search engine. More on that later.

Google, either by design or through incompetence, has killed search. Most results are spammy and provide very little value to users. Most useful information is locked away in SEO-free or unindexed silos. The solution might be to build a search engine of our own.