This post is part of my 38 before 38 series: I am writing a blog post every day for the 38 days leading up to my 38th birthday.

In this series I have insisted that LLMs such as GPT-4, Claude, etc. are not “AI”. More precisely, they are not any sort of approximation of human intelligence. However, there is one line of argument against my position that I find very compelling. It concerns how machines are seen as analogues of their natural counterparts, and how those machines end up being designed in practice.

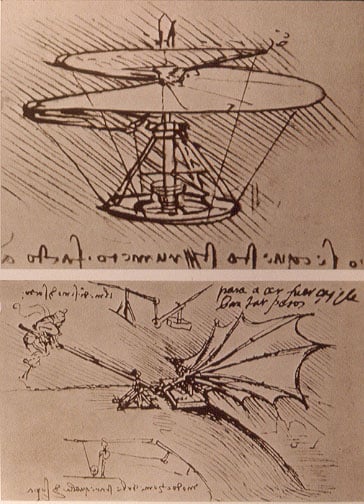

Flying High

The earliest ideas for flying machines all had one thing in common: they tried to replicate bird wings or kites. Early efforts did produce some successful flight, namely hot-air balloons and airships. But we truly achieved flight with aeroplanes (or airplanes).

Beyond the basic silhouette, a bird and a plane have very little in common. A modern airliner does not flap its wings to keep a light body of hollow bones aloft. Instead, it burns fuel to propel itself, along with tons of passengers and cargo, through the air.

A plane flies faster and farther than a bird. Flight achieved through industrial methods surpasses nature. We might have something similar for the task of “thinking”.

Words are all I have

Language is at the center of how humans transmit, receive, and synthesize information. It is what we use to reason, to plead, to pray, and to negotiate. So, the argument goes, the goal of an “intelligent” machine is to produce human-like language. Instead of mimicking the human brain, and with it pattern recognition, such a machine would simply be a conversation engine.

Sounds a lot like ChatGPT, doesn’t it? As discussed yesterday, these models work by taking a word or sequence of words as input and predicting which words are most likely to come next. It is not a living, thinking being making connections between disparate ideas.

But the end result is no different from what a living, thinking being would produce. So how is this not intelligence?
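To make “predicting the next word” a little more concrete, here is a deliberately tiny sketch in Python. It is a bigram model: it only counts which word follows which in its training text and then picks the most frequent follower. This is an assumption chosen for illustration; models like GPT-4 or Claude use large neural networks over long contexts, not simple word counts. The function names `train_bigrams`, `predict_next`, and `generate` are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent word seen after `word`, or None if never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def generate(follows: dict[str, Counter], start: str, length: int) -> list[str]:
    """Repeatedly append the predicted next word, stopping early at a dead end."""
    words = [start]
    for _ in range(length):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return words


if __name__ == "__main__":
    minutes = "the board approved the budget . the board approved the minutes ."
    model = train_bigrams(minutes)
    print(" ".join(generate(model, "the", 4)))
```

Trained on a line of meeting minutes, this toy can only ever echo meeting minutes back; it has nothing to say about a word like “campfire” because it never saw one.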

So why is it not?

While this argument is compelling, it ignores one huge aspect of LLMs: they suck. To be less crass, they do not replicate human language. They are very good at reproducing the most repetitive, mechanical forms of human communication, but they can’t hold a real conversation.

Not because the technology is inherently incapable, but because there is no data of real human conversation to train these models on. Most human language is not in blog posts, novels, or emails. It is sweet nothings whispered at night, fireside chats on camping trips, people trading the latest gossip at family gatherings. None of this exists as data. What exists are minutes of Board of Directors’ meetings and StackOverflow threads on centering a div.